By Dan Hill, Product Lead, Airbnb

How much should you charge someone to live in your house? Or how much would you pay to live in someone else’s house? Would you pay more or less for a planned vacation or for a spur-of-the-moment getaway?

Answering these questions isn’t easy. And the struggle to do so, my colleagues and I discovered, was preventing potential rentals from getting listed on our site—Airbnb, the company that matches available rooms, apartments, and houses with people who want to book them.

In focus groups, we watched people go through the process of listing their properties on our site—and get stumped when they came to the price field. Many would take a look at what their neighbors were charging and pick a comparable price; this involved opening a lot of tabs in their browsers and figuring out which listings were similar to theirs. Some people had a goal in mind before they signed up, maybe to make a little extra money to help pay the mortgage or defray the costs of a vacation. So they set a price that would help them meet that goal without considering the real market value of their listing. And some people, unfortunately, just gave up.

Clearly, Airbnb needed to offer people a better way—an automated source of pricing information to help hosts come to a decision. That’s why we started building pricing tools in 2012 and have been working to make them better ever since. This June, we released our latest improvements. We started doing dynamic pricing—that is, offering new price tips daily based on changing market conditions. We tweaked our general pricing algorithms to consider some unusual, even surprising characteristics of listings. And we’ve added what we think is a unique approach to machine learning that lets our system not only learn from its own experience but also take advantage of a little human intuition when necessary.

In the online world, a number of companies use algorithms to set or suggest prices. eBay, for example, tells you what similar products have sold for and lets you choose a price based on that information. eBay’s pricing problem is relatively simple to solve: It doesn’t matter where the sellers and buyers are or whether you’re selling the product today or next week. Meanwhile, over at Uber and Lyft, the ride-sharing companies, geography and time do matter, but these two companies simply set prices by decree; there is no user choice or need for transparency in how the prices are determined.

At Airbnb, we faced an unusually complex problem. Every one of the million-plus listings on our site is unique, having its own address, size, and decor. Our hosts also vary in their willingness to play concierge, cook, or tour guide. And events—some regular, like seasonal weather changes; others unusual, like large local events—muddy the waters even further.

Three years ago we started building a tool to provide price tips to potential hosts based on the most important characteristics about a listing, like the number of rooms and beds, the neighboring properties, and certain amenities, like a parking space or even a pool. We rolled it out in 2013, and it did well, for the most part. But it had limitations. For one, the way its price-setting algorithms worked didn’t change. If we set them to consider that the Pearl District in Portland, Ore., say, had a certain boundary, or that rooms on a river were always worth a certain amount more than rooms a block from that river, the algorithm would apply those metrics forever, unless we went in manually to change them. And our pricing tools weren’t dynamic—price tips didn’t adjust based on when you were booking a room or how many other people seemed to be booking rooms at the same time.

Since mid-2014, we’ve been trying to change that. We wanted to build a tool that learns from its mistakes and improves by interacting with users. We also wanted the tool to adjust to demand and, when necessary, drop price tips to fill rooms that would otherwise stay empty or raise them in response to demand. We’ve started to figure that out, and we began to let our hosts use this new tool in June. We’ll tell you about how these tools evolved and how they work today. We’ll also tell you why we think our latest tool, Aerosolve, will eventually do a lot more than just price home rentals. That’s why we’re releasing it into the open-source community.

To get an idea of the problem we faced, consider three different situations.

Imagine you lived in Brazil during the last football (soccer) World Cup. Your hometown was about to see a huge influx of travelers from all over the world, all united by the greatest football tournament on the planet. You had a spare room in your house, and you wanted to meet other football lovers and make some extra cash.

For our tool to help you figure out a price, there were a few factors to consider. First, this was a once-in-a-generation event in that country, so we at Airbnb had absolutely no historical data to look at. Second, every hotel was sold out, so clearly there was a massive imbalance between supply and demand. Third, the people coming to visit already had paid immense sums for their tickets and international travel, so they’d probably be prepared to pay a lot for a room. All of that had to be considered in addition to the obvious parameters of size, number of rooms, and location.

Or imagine you’ve inherited a castle in the Highlands of Scotland and, in order to pay the costs of cleaning the moats, operating the distillery, and feeding the falcons, you decide to turn the turret into a bed-and-breakfast. Unlike the World Cup situation, you’d have some comparative data, based on nearby castles. Some of that data would likely span many years, providing information about the seasonality of tourism and travel. And you’d know, because there are a number of other accommodation options in the area, that the supply and demand for tourist rooms is pretty balanced right now. Yet this particular castle is the only one in Scotland with a uniquely designed double moat. How can a system calculate what this rare and unique feature would be worth?

As a final example, imagine you own a typical two-bedroom apartment in Paris. You’re taking a few weeks of your August vacation and heading south to Montpellier. There are lots of comparative properties, so it’s relatively easy to price. But say, after you receive a wave of interest based on the first listing, you decide to start increasing the price gradually to try to maximize the amount earned. That’s a tricky proposition: What happens if you go too high, or cut it too close to the booking date and lose the chance to make any money? Or perhaps the opposite occurs: You take the first inquiry at a lower price and spend the next few months wishing you’d been brave enough to take a little more risk. How do we help hosts get better information to prevent this kind of uncertainty and regret?

These are the kinds of questions we faced. We wanted to build an easy-to-use tool to feed hosts information that is helpful as they decide what to charge for their spaces, while making the reasons for its pricing tips clear.

The overall architecture of our tool was surprisingly simple to figure out: When a new host begins adding a space to our site, our system extracts what we call the key attributes of that listing, looks at other listings with the same or similar attributes in the area, finds those that are being successfully booked, factors in demand and seasonality, and bases a price tip on the median price of those listings.
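To make that flow concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not Airbnb's actual code: the `Listing` fields, the "sleeps within one guest" similarity rule, and the single `demand_factor` multiplier are all hypothetical stand-ins for the key attributes and the demand and seasonality adjustments described above.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Listing:
    # Hypothetical "key attributes"; the real attribute set is far larger.
    neighborhood: str
    room_type: str        # e.g. "private_room" or "entire_home"
    sleeps: int
    nightly_price: float
    booked: bool          # True if the listing was recently booked at this price


def price_tip(new: Listing, comparables: List[Listing],
              demand_factor: float = 1.0) -> Optional[float]:
    """Median price of similar, successfully booked listings in the same area,
    scaled by an assumed demand/seasonality multiplier."""
    similar_prices = [
        c.nightly_price
        for c in comparables
        if c.booked
        and c.neighborhood == new.neighborhood
        and c.room_type == new.room_type
        and abs(c.sleeps - new.sleeps) <= 1   # assumed similarity rule
    ]
    if not similar_prices:
        return None  # no comparable data; a real system would widen the search
    return round(median(similar_prices) * demand_factor, 2)


# Made-up sample data for illustration only.
market = [
    Listing("Greenwich", "private_room", 2, 120.0, True),
    Listing("Greenwich", "private_room", 2, 130.0, True),
    Listing("Greenwich", "private_room", 3, 140.0, True),
    Listing("Greenwich", "private_room", 2, 300.0, False),   # never booked: ignored
    Listing("Elsewhere", "private_room", 2, 60.0, True),     # other area: ignored
]
new_listing = Listing("Greenwich", "private_room", 2, 0.0, False)
tip = price_tip(new_listing, market)
print(tip)
```

Using the median rather than the mean keeps a single overpriced or underpriced comparable from skewing the tip.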

The tricky part began when we tried to figure out what, exactly, the key attributes of a listing are. No two listings are the same in design or layout, there are listings in every corner of a city, and many aren’t just apartments or houses but castles and igloos. We decided that our tool would use three major types of data in setting prices: similarity, recency, and location.

For data on similarity, we started with all the quantifiable attributes we know about a listing and then looked to see which were most highly correlated with the price a guest would pay for that listing. We arrived at how many people the space sleeps, whether it’s an entire property or a private room, the type of property (apartment, castle, yurt), and the number of reviews.

Perhaps the most surprising attribute here is the number of reviews. It turns out that people are willing to pay a premium for places with many reviews. While Amazon, eBay, and others do rely on reviews to help users make selections about what to buy or whom to buy it from, it’s not clear that the number of reviews makes a big difference in price. For us, having even a single review rather than no reviews makes a huge difference to a listing.

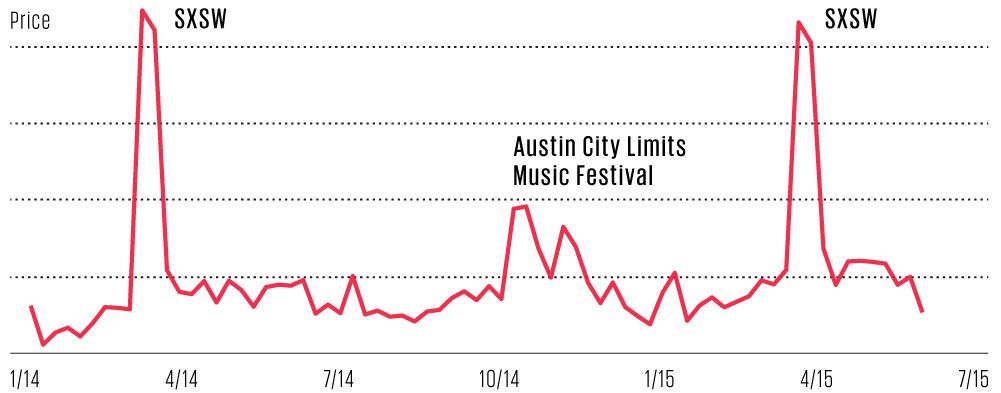

We considered recency, because markets change frequently, especially in travel. On top of that, travel is a highly seasonal business, so it’s important to look for the market rate either as it is today, or as it was this time last year; last month may not be relevant.

In highly developed markets like London or Paris, obtaining this market data is easy enough—there are thousands of listings being booked on our site to compare with. For new and emerging markets, we classified them into groups of similar size, level of tourism, and stage of growth for Airbnb. This way, we are able to compare listings not only in the actual city a space is in but also in other markets with similar characteristics. So if a Japanese host is one of the first Airbnb users to list an apartment in Kyoto, we might look at data from Tokyo or Okayama, back when Airbnb was similarly new in those cities, or from Amsterdam, a more mature market for Airbnb but one with a similar size and level of tourism.

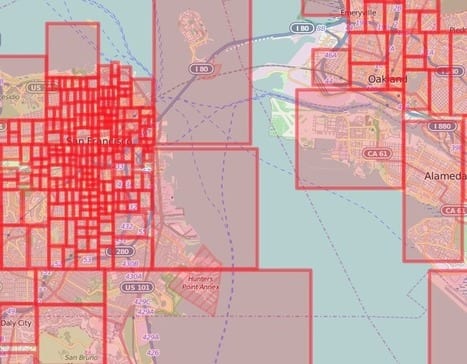

Finally, we needed to consider location, a rather different problem for us than for hotels. Hotels are typically grouped in just a few main locations; we have listings in almost every corner of a city.

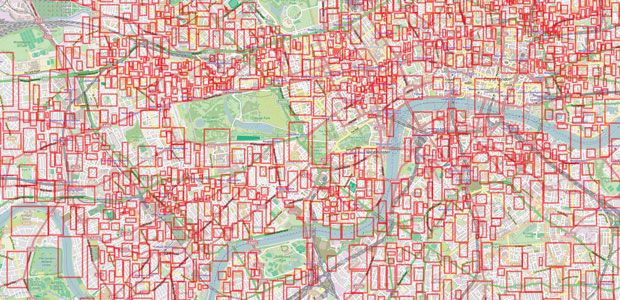

Early versions of our pricing algorithms plotted an expanding circle around a listing, considering similar properties at varying radii from the listing location. This seemed to work well for a while, but we eventually discovered a crucial flaw. Imagine our apartment in Paris for a minute. If the listing is centrally located, say, right by the Pont Neuf just down from the Louvre and Jardin des Tuileries, then our expanding circle quickly begins to encompass very different neighborhoods on opposite sides of the river. In Paris, though both sides of the Seine are safe, people will pay quite different amounts to stay in locations just a hundred meters apart. In other cities there’s an even sharper divide. In London, for instance, prices in the desirable Greenwich area can be more than twice as much as those near the London docks right across the Thames.

We therefore got a cartographer to map the boundaries of every neighborhood in our top cities all over the world. This information created extremely accurate and relevant geospatial definitions we could use to cluster listings around major geographical and structural features like rivers, highways, and transportation connections.
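The difference between the two approaches can be sketched in a few lines of Python. The coordinates, the neighborhood labels, and the helper names below are invented for illustration; a production system would test each listing against mapped neighborhood polygons rather than a precomputed label.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


# Hypothetical listings near a river; "c" sits on the opposite bank.
listings = [
    {"id": "a", "lat": 48.8580, "lon": 2.3410, "neighborhood": "right_bank"},
    {"id": "b", "lat": 48.8585, "lon": 2.3425, "neighborhood": "right_bank"},
    {"id": "c", "lat": 48.8555, "lon": 2.3405, "neighborhood": "left_bank"},
]
target = {"lat": 48.8578, "lon": 2.3415, "neighborhood": "right_bank"}


def comparables_by_radius(target, listings, radius_km):
    """Early approach: everything inside a circle around the listing."""
    return [
        l["id"] for l in listings
        if haversine_km(target["lat"], target["lon"], l["lat"], l["lon"]) <= radius_km
    ]


def comparables_by_neighborhood(target, listings):
    """Later approach: only listings inside the same mapped neighborhood."""
    return [l["id"] for l in listings if l["neighborhood"] == target["neighborhood"]]


print(comparables_by_radius(target, listings, 0.5))   # the circle crosses the river
print(comparables_by_neighborhood(target, listings))  # the boundary does not
```

In this toy data a half-kilometer circle pulls in the listing across the river, while the neighborhood filter keeps the comparison on one bank.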

So now, for example, for the first weekend of October, the price tip for a basic private room for two in London on the Greenwich side of the Thames comes up at US $130 a night; a room with similar attributes across the river comes up at $60 a night.

We were pretty happy with our algorithms—that is, after we fixed a bug that had caused our system to give a price tip of $99 on a large number of new listings, no matter what their particular characteristics. It didn’t happen for long, and not in every region, but we recognize that when this happens it may cause people to question whether our pricing tools are working.

We improved our algorithms over time until they were able to consider thousands of different factors and understand geographic location on a very detailed level. But the tool still had two weaknesses. The tips it gave were static—it understood local events and peak tourist seasons, and so would suggest different prices for the same property for different dates of the year. It didn’t, however, change those prices as the date approached, as airlines do, dropping prices when bookings were slow and raising them when the market heated up.

And the tool itself was static. Its tips did improve somewhat as it tapped into ever more historical data, but the algorithms themselves didn’t get better.

Last summer, we started a project to address both of these problems. On the dynamic pricing side, our goal was to give each host a new pricing tip every day for each date in the future the property is available for booking. Dynamic pricing isn’t new. Airlines began applying it several decades ago, adjusting prices—often in real time—to try and ensure maximum occupancy and maximum revenue per seat. Hotels followed suit as consolidation made the large chains larger, bringing them an ever-increasing amount of data about their business, and hotel marketing moved online, allowing the chains to change prices multiple times a day.

So investing in dynamic pricing—once we had several years of historical data about a large number of properties to tap—made a lot of sense for us despite the fact that it requires more computing resources.
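As a rough illustration of what "a new tip every day for each future date" could look like, consider the Python sketch below. Everything specific in it is an assumption made for the example: the `demand_index` input (current booking pace divided by typical pace for that night), the clamping range, and the last-minute discount rule are not taken from Airbnb's system.

```python
from datetime import date


def daily_tips(base_price, today, available_dates, demand_index):
    """Return a price tip for each available night.

    demand_index maps a date to an assumed ratio of current booking pace to
    typical pace for that night (1.0 means normal demand).
    """
    tips = {}
    for night in available_dates:
        lead_days = (night - today).days
        demand = demand_index.get(night, 1.0)
        # Clamp so that one noisy signal cannot swing the tip too far.
        factor = min(max(demand, 0.7), 1.5)
        # Assumed rule: nudge the tip down when a night is close and demand is soft.
        if lead_days <= 3 and demand < 1.0:
            factor *= 0.9
        tips[night] = round(base_price * factor, 2)
    return tips


# Rerunning this each day with fresh demand data yields updated tips.
today = date(2015, 9, 1)
nights = [date(2015, 9, 2), date(2015, 10, 3)]
demand = {date(2015, 9, 2): 0.8, date(2015, 10, 3): 1.3}
tips = daily_tips(100.0, today, nights, demand)
for night, tip in tips.items():
    print(night.isoformat(), tip)
```

The extra computing cost comes from the loop: every listing needs a fresh tip for every open night, every day.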

Making the algorithms improve themselves over time was harder, particularly because we wanted our system to allow humans to easily interpret, and in some cases influence, the computer’s “thought process” as it did so. Machine-learning systems of the size and complexity required to handle our needs often work in mysterious ways. The Google Brain that learned to find cat videos on the Web, for example, has layers upon layers of algorithms that classify data, and the way it gets to its conclusions—cat video or not—is virtually impossible for a human to replicate.

We selected a machine-learning model called a classifier. It uses all of the attributes of a listing and prevailing market demand and then attempts to classify whether it will get booked or not. Our system calculates price tips based on hundreds of attributes, such as whether breakfast is included and whether the guest gets a private bath. Then we began training the system by having it check price tips against outcomes. Considering whether or not a listing gets booked at a particular price helps the system hone its price tips and estimate the probability of a price being accepted. Our hosts, of course, can choose to go higher or lower than the price tip, and then our system adjusts its estimate of likelihood accordingly. It later checks back on the fate of the listing and uses this information to adjust future tips.
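One simple way to picture such a classifier is a logistic model that turns a listing's attributes and a candidate price into a booking probability. The Python sketch below is only that, a picture: the feature names, the hand-picked weights, and the rule of suggesting the highest candidate price that still meets a target probability are assumptions for illustration, not a description of the production model.

```python
import math

# Hypothetical weights; a real model would learn these from booking outcomes.
WEIGHTS = {
    "bias": 1.0,
    "price_ratio": -3.0,   # applied to (price / market price - 1)
    "private_bath": 0.8,
    "breakfast": 0.4,
}


def booking_probability(price, market_price, private_bath, breakfast):
    """Estimated probability that the listing is booked at this price."""
    z = (WEIGHTS["bias"]
         + WEIGHTS["price_ratio"] * (price / market_price - 1.0)
         + WEIGHTS["private_bath"] * private_bath
         + WEIGHTS["breakfast"] * breakfast)
    return 1.0 / (1.0 + math.exp(-z))


def highest_price_meeting(target_prob, market_price, private_bath, breakfast,
                          candidates):
    """Assumed tip rule: the highest candidate price whose estimated booking
    probability is still at least target_prob."""
    acceptable = [
        p for p in candidates
        if booking_probability(p, market_price, private_bath, breakfast) >= target_prob
    ]
    return max(acceptable) if acceptable else None


candidates = range(80, 201, 10)
tip = highest_price_meeting(0.7, 100.0, private_bath=1, breakfast=0,
                            candidates=candidates)
print(tip)
print(round(booking_probability(150, 100.0, 1, 0), 3))  # a host pricing above the tip
```

When a host sets a price above or below the tip, the same function gives the adjusted estimate of how likely the booking is.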

Here’s where the learning comes in. With knowledge about the success of its tips, our system began adjusting the weights it gives to the different characteristics about a listing—the “signals” it is getting about a particular property. We started out with some assumptions, such as that geographic location is hugely important but that usually the presence of a hot tub is less so. We’ve retained certain attributes of a listing considered by our previous pricing system, but we’ve added new ones. Some of the new signals, like “number of lead days before booking day,” are related to our dynamic pricing capability. We added other signals simply because our analysis of historical data indicated that they matter.

For instance, certain photos are more likely to lead to bookings. The general trend might surprise you—the photos of stylish, brightly lit living rooms that tend to be preferred by professional photographers don’t attract nearly as many potential guests as photos of cozy bedrooms decorated in warm colors.

As time goes on, we expect constant automatic refinements of the weights of these signals to improve our price tips.
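The idea of refining weights from outcomes can be sketched with a single stochastic-gradient step on a logistic model. This is a generic textbook update, swapped in here for illustration; the signal names and the learning rate are hypothetical, and nothing below is claimed to match how Aerosolve trains.

```python
import math


def predict(weights, features):
    """Booking probability from a weighted sum of signals."""
    z = sum(weights.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def update(weights, features, booked, learning_rate=0.1):
    """One gradient step on log loss; returns a new weights dict."""
    error = (1.0 if booked else 0.0) - predict(weights, features)
    new_weights = dict(weights)
    for name, value in features.items():
        new_weights[name] = new_weights.get(name, 0.0) + learning_rate * error * value
    return new_weights


# Hypothetical signals for one listing on one night.
features = {"bias": 1.0, "hot_tub": 1.0, "lead_days_scaled": 0.5}
weights = {"bias": 0.0, "hot_tub": 0.0, "lead_days_scaled": 0.0}

before = predict(weights, features)
weights = update(weights, features, booked=True)   # the listing did get booked
after = predict(weights, features)
print(before, after)  # the estimate moves toward the observed outcome
```

Each observed outcome nudges the weights of the signals that were present, so signals that keep predicting bookings correctly gain influence over time.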

We can also go in and influence the weighting if we believe we know something that the model has yet to figure out. Our system can produce a list of factors and weights considered for each price tip, which we have our people looking at. If we think something isn’t well represented, we will add another signal manually to the model.

For example, we know that a listing in Seattle without Wi-Fi access to a broadband Internet connection is extremely unlikely to get booked at any price, so we don’t have to wait for our system to figure it out. We can adjust that metric ourselves.
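A sketch of how human input might sit alongside learned weights is shown below in Python. The function names, the signals, and the idea of a simple override dictionary are assumptions made for the example; the point is only that a per-tip list of factors and weights makes it easy to see what the model considered and to adjust a signal by hand.

```python
def effective_weights(learned, manual_overrides):
    """Learned weights, with any human-supplied values taking precedence."""
    merged = dict(learned)
    merged.update(manual_overrides)
    return merged


def explain(weights, features):
    """List each signal's contribution to the score, largest effect first."""
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in features.items()
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical values: the model has barely learned that missing Wi-Fi matters.
learned = {"bias": 0.5, "hot_tub": 0.2, "no_wifi": -0.1}
manual = {"no_wifi": -6.0}   # a person encodes what the model has yet to figure out

features = {"bias": 1.0, "hot_tub": 1.0, "no_wifi": 1.0}
report = explain(effective_weights(learned, manual), features)
for name, contribution in report:
    print(f"{name}: {contribution:+.2f}")
```

Because the report is just a ranked list of signals and contributions, a reviewer can spot a factor that is missing or underweighted without reverse engineering the model.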

Our system also constantly adjusts our maps to reflect changes in neighborhood boundaries. So instead of relying on local maps to tell us, say, where Portland’s Sunnyside neighborhood ends and Richmond begins, we are relying on the data on bookings and price differentials within a city to draw those kinds of lines. This approach also lets us spot microneighborhoods that we were not previously aware of. Such areas may have a large number of popular listings that don’t necessarily map to standard neighborhood boundaries, or there may be some local feature that makes a small section of a larger traditional neighborhood more desirable.

These tools are generating price tips for Airbnb properties globally today. But we think the underlying technology can do a lot more than just better inform potential hosts as they choose prices for their online rentals. That’s why we’ve released the machine-learning platform on which the tools are based, Aerosolve, as an open-source tool. It will give people in industries that have yet to embrace machine learning an easy entry point. By clarifying what the system is doing, it will remove the fear factor and increase the adoption of these kinds of tools. So far, we’ve used it to build a system that produces paintings in a pointillist style. We’re eager to see what happens with this tool as creative engineers outside of our industry start using it.

This article originally appeared in print as “How Much Is Your Spare Room Worth?”

About the Author

Dan Hill, product lead at Airbnb, wrote the lodging-rental website’s pricing algorithm. He also cofounded Crashpadder, a home-sharing company acquired by Airbnb in 2012. Hill first did Web development to support his career as a violinist. “I sort of woke up one day and realized I hadn’t really been focused too much on [the violin],” he said in a recent interview. His next thought? “I really want to spend my life working on technology and products.”
